I have been working closely with Large Language Models since they became a thing with ChatGPT. So far I have built a lot of applications, mostly in the RAG (Retrieval Augmented Generation) space.

First of all, this is an amazing piece of tech. It has the potential to replace a lot of people in their jobs, and when I say potential, I mean it will start replacing humans soon. The biggest hurdle in the way is the LLM context window.

If you have been working in this space, you probably know that LLMs struggle with long conversations. No matter how hard you try, after a while the conversation becomes fruitless and starts to go all over the place. That is because, unlike a human being, the model has a limited context memory: it can only attend to a fixed number of tokens at a time. Don’t confuse this with the knowledge it has. It has been trained on a huge portion of the internet, so it knows quite a lot. But at inference time it can only hold a limited part of our conversation, and that runs out soon.

I’m experimenting in this space, trying out ways to make it more efficient. I’m not officially a scientist, but I like to dig into research in my free time, and that’s what I’ve been doing.

First, let me talk about the challenges I was facing, and then I’ll dive deeper into how I solved them (kinda). Even before that, let me put down my goal so it’s clear what I’m working on.

My goal is to build a generic agent that can use tools to solve a user’s query.

Now that the goal is clear, let’s begin with the first problem.

Interacting with the LLM and stating how you want it to behave

The only way to communicate with an LLM is TEXT.

You send text, give it instructions about the output you want, and pray that it sticks to that output. So the first problem you encounter is PROMPT construction: getting the LLM to work the way you want it to.

Let’s say you have constructed the correct prompt, which you will send to the LLM every time along with the user’s query.

Now, this prompt could be very simple or very complex. We don’t know, and quite literally we don’t care.

Let’s say the user wants to combine the insights from multiple articles on different websites to write a 2000-word essay.

So the user prompts with:

Read the following articles and gather the insights to write a 2000 words essay:

- https://bemyaficionado.com/is-the-cosmos-a-vast-computation/

- https://bemyaficionado.com/building-semantic-search-for-e-commerce-using-product-embeddings-and-opensearch/

- https://www.anthropic.com/news/tracing-thoughts-language-model

Now, if I send this directly to a raw LLM, it will give me an essay, but that essay will contain nothing from the given articles, because the model doesn’t actually go and read them. Instead it guesses the content from the URLs. I’m not talking about ChatGPT, Claude, or other recent platforms here, because they already have tools integrated with their LLMs, but if you can still access GPT-3 you will know what I mean.

So challenge number 1

Problem 1 – How to provide tools to LLM?

To provide tools to the LLM, you have to tell it about them in the prompt. Let’s stick with the example above, where the user wants to combine insights from different articles. This time I won’t send the raw query to the LLM; I will enrich it with the available tools. So I would send a prompt like the following:

You are a genius assistant who performs tasks to answer user's query in the best possible way. You have access to tools which you can use to perform tasks.

# AVAILABLE TOOLS

1. fetch_webpage(url): Fetches the contents of the given url

2. send_response(text): Sends the response back to the user

# OUTPUT FORMAT

```json
{
  "thought": "string",
  "tool": {
    "name": "<name of the tool from above>",
    "params": {
      "url": "<url of the page>"
    }
  }
}
```

User Current Query

{user.query}
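A prompt like the one above can be assembled in code. Here is a minimal sketch of how that might look; the `TOOLS` dictionary, `OUTPUT_FORMAT`, and `build_prompt` are names I made up for illustration, not part of any library.

```python
import json

# Hypothetical tool catalogue; signatures and descriptions mirror the prompt above.
TOOLS = {
    "fetch_webpage(url)": "Fetches the contents of the given url",
    "send_response(text)": "Sends the response back to the user",
}

# The JSON shape the model is asked to reply with.
OUTPUT_FORMAT = {
    "thought": "string",
    "tool": {
        "name": "<name of the tool from above>",
        "params": {"url": "<url of the page>"},
    },
}


def build_prompt(user_query: str) -> str:
    """Wrap the raw user query with instructions, the tool list and the output format."""
    tool_lines = "\n".join(
        f"{i}. {signature}: {description}"
        for i, (signature, description) in enumerate(TOOLS.items(), start=1)
    )
    return (
        "You are a genius assistant who performs tasks to answer user's query "
        "in the best possible way. You have access to tools which you can use "
        "to perform tasks.\n\n"
        f"# AVAILABLE TOOLS\n{tool_lines}\n\n"
        f"# OUTPUT FORMAT\n{json.dumps(OUTPUT_FORMAT, indent=2)}\n\n"
        f"User Current Query\n{user_query}\n"
    )
```

Keeping the tool list in a data structure means that adding a tool later only changes `TOOLS`, not the prompt text itself.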

As you can see, the prompt has grown: I now instruct the LLM on what to do and then pass in the user query. At this point, you hope the prompt is good enough for the LLM to understand it and reply in the requested format. This is what I got in return:

Here is my initial thought process for this task:

```json
{
  "thought": "To gather insights from the three articles and write a 2000 word essay, I will need to read the contents of each webpage first. I can use the fetch_webpage tool to fetch the content of each URL.",
  "tool": {
    "name": "fetch_webpage",
    "params": {
      "url": "https://bemyaficionado.com/is-the-cosmos-a-vast-computation/"
    }
  }
}
```

It is good enough for a first try. But this is not the response the user wants to see; it is only the model asking for a tool call. So comes the next part.

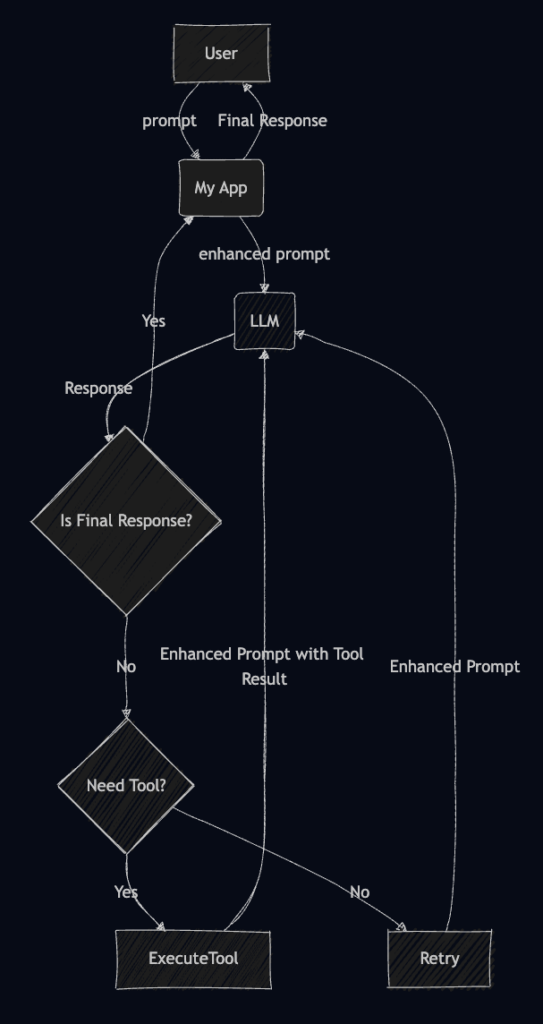

Process the LLM response, parse the provided json to get the tool name and parameters. Invoke those tools with the provided parameters and then send back the prompt with the tools’ response. A simple workflow looks like this:
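The parse-and-invoke step can be sketched in a few lines. This is only an illustration under my own assumptions: `parse_tool_call` and `run_tool` are hypothetical helper names, and I assume the model wraps its JSON in a fenced block, as it did in the reply shown earlier.

```python
import json
import re
from typing import Callable, Dict

# Matches a fenced block (optionally tagged json) and captures the JSON object inside it.
JSON_BLOCK = re.compile(r"`{3}(?:json)?\s*(\{.*\})\s*`{3}", re.DOTALL)


def parse_tool_call(llm_response: str) -> dict:
    """Extract the JSON object from a fenced block, or treat the whole reply as JSON."""
    match = JSON_BLOCK.search(llm_response)
    payload = match.group(1) if match else llm_response
    return json.loads(payload)


def run_tool(call: dict, tools: Dict[str, Callable[..., str]]) -> str:
    """Look up the requested tool by name and invoke it with the given parameters."""
    name = call["tool"]["name"]
    params = call["tool"].get("params", {})
    if name not in tools:
        # Return the error as text so it can be fed back to the model.
        return f"ERROR: unknown tool {name!r}"
    return tools[name](**params)
```

The string returned by `run_tool` is what goes back into the next prompt as the tool’s response. Returning errors as text, rather than raising, gives the model a chance to correct itself on the next turn.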

Soon we hit our problem of context memory. A webpage contains a lot of text, and here we are talking about combining text from several articles. This exhausts the context window very quickly and the LLM call errors out. You can’t feed all the content to it at once. I wish we could, but we can’t. I’m using Claude 3 (legacy), which comes with a 200,000-token context window. With full pages, tool results, and conversation history all piling into the prompt, there was no way my LLM could perform this simple task in one go.
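To get a feel for how quickly the window fills up, here is a rough budget check. It is a back-of-the-envelope sketch only: the figure of about 4 characters per token is a common rule of thumb for English text, not an exact tokenizer count, and `reserved_for_output` is a number I picked for illustration.

```python
from typing import Sequence

CONTEXT_WINDOW_TOKENS = 200_000  # window size quoted in the text
CHARS_PER_TOKEN = 4              # assumption: rough rule of thumb for English text


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def fits_in_window(parts: Sequence[str], reserved_for_output: int = 4_000) -> bool:
    """Check whether all prompt parts plus room for the reply fit in the window."""
    used = sum(estimate_tokens(part) for part in parts)
    return used + reserved_for_output <= CONTEXT_WINDOW_TOKENS
```

Raw HTML is much larger than the visible article text, so a few fetched pages plus the accumulated history can fail this check sooner than you would expect.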

Problem 2 – How to manage the context window size?

Wait a minute… this is the same problem we face when managing a CPU’s L1 cache. We keep only the variables for the current task in the small, fast memory and store everything else in a larger one: RAM in the CPU’s case, a file in my case.

So, I grabbed the webpage, stored it in a temporary file, and passed the file path to the LLM.

This meant I had to provide a way for the LLM to access the file content at will.
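Here is a minimal sketch of a fetch tool that returns a file path instead of the page content. It uses only the standard library; the `download` helper and the injectable `fetch` parameter are my own additions so the function can be tried without network access.

```python
import tempfile
import urllib.request
from typing import Callable


def download(url: str) -> str:
    """Plain HTTP GET using only the standard library."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return response.read().decode("utf-8", errors="replace")


def fetch_webpage(url: str, fetch: Callable[[str], str] = download) -> str:
    """Save the page to a temporary file and return the path, not the content."""
    content = fetch(url)
    with tempfile.NamedTemporaryFile(
        mode="w", suffix=".html", delete=False, encoding="utf-8"
    ) as handle:
        handle.write(content)
        return handle.name
```

Only the short path string ends up in the prompt, so the size of the page no longer matters at this step.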

Remember, the only way to interact with an LLM is through prompts. At no point can it forget the goal that has been set by the user. It also needs to keep track of its own progress: how much content has been processed and what remains to be processed.

The solution was to give it another tool that it can use to store intermediate results, in the form of observations, in temporary storage and retrieve them later. It also needed to access parts of a file, and it gets difficult to expose every such operation as a separate tool. So I added another tool that lets it execute its own code and read the file however it wants.

So now I had a total of 4 tools in my repository:

- Python Code Runner – takes a code snippet and executes it

- Write to temp file – takes text, writes it to a temporary file, and returns the file path for later processing

- Send Response – sends the response to the user

- Fetch Webpage – fetches a page, stores the response in a file, and returns the file path
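Two of these tools, the code runner and the temp file writer, could look roughly like this. Treat it as a sketch under my own assumptions: `run_python` and `write_to_temp_file` are illustrative names, and running the snippet in a separate interpreter is just one simple option. Executing model-written code is risky, so a real setup would need a proper sandbox.

```python
import subprocess
import sys
import tempfile


def run_python(code: str, timeout: int = 30) -> str:
    """Execute a snippet in a separate interpreter and return what it printed."""
    result = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    if result.returncode != 0:
        # Hand the traceback back as text so the model can fix its own code.
        return "ERROR: " + result.stderr
    return result.stdout


def write_to_temp_file(text: str) -> str:
    """Persist an intermediate result and return the path where it was stored."""
    with tempfile.NamedTemporaryFile(
        mode="w", suffix=".txt", delete=False, encoding="utf-8"
    ) as handle:
        handle.write(text)
        return handle.name
```

With these two in place, the model can read just a slice of a stored file, for example by asking the runner to execute `print(open(path, encoding="utf-8").read()[:2000])` with the path it was given earlier.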

This is how my enhanced prompt template looks now:

{SYSTEM_INSTRUCTIONS}

{AVAILABLE TOOLS}

{TOOL USAGE EXAMPLES}

One shot examples so it knows how to return the response

{TOOL OBSERVATIONS}

Result from each tool usage

{CONVERSATION HISTORY}

Without this you cannot ask the next relevant question in the conversation

{CURRENT USER QUERY}

{user.query}
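Filling in a template like this on every turn can be sketched as follows. `PromptState` and `render_prompt` are hypothetical names of mine, and the `#` section headers are an assumption about the layout.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptState:
    """Everything that gets stitched into the prompt on each turn."""
    system_instructions: str
    available_tools: str
    tool_usage_examples: str
    tool_observations: List[str] = field(default_factory=list)
    conversation_history: List[str] = field(default_factory=list)


def render_prompt(state: PromptState, user_query: str) -> str:
    """Join the sections in the same order as the template above."""
    sections = [
        ("SYSTEM INSTRUCTIONS", state.system_instructions),
        ("AVAILABLE TOOLS", state.available_tools),
        ("TOOL USAGE EXAMPLES", state.tool_usage_examples),
        ("TOOL OBSERVATIONS", "\n".join(state.tool_observations)),
        ("CONVERSATION HISTORY", "\n".join(state.conversation_history)),
        ("CURRENT USER QUERY", user_query),
    ]
    return "\n\n".join(f"# {title}\n{body}" for title, body in sections)
```

The first three sections stay fixed, while the observations and the history grow with every step, which is where the space goes.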

Believe it or not, once I orchestrated this flow, it worked quite well for the most part. Until I hit another wall: the same wall of context memory management.

It works well for small tasks that don’t need many actions. As the number of actions or tool calls grows, more space is needed to store the results of those tools, and this quickly fills up the context. The technique I used to work around it was to keep only the last “n” tool results and delete the rest. But then you hit a wall when the result of tool call 1 is needed by a much later action m.
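The “keep the last n results” idea, and its weakness, can be shown with a bounded queue. This is a minimal sketch; `RecentObservations` is a made-up class name and the file paths are placeholders.

```python
from collections import deque
from typing import Deque, Tuple


class RecentObservations:
    """Keeps only the last n tool results; older ones are silently dropped."""

    def __init__(self, n: int) -> None:
        self._items: Deque[Tuple[str, str]] = deque(maxlen=n)

    def add(self, tool_name: str, result: str) -> None:
        self._items.append((tool_name, result))

    def render(self) -> str:
        """Format the surviving results for the TOOL OBSERVATIONS section."""
        return "\n".join(f"[{name}] {result}" for name, result in self._items)


window = RecentObservations(n=2)
window.add("fetch_webpage", "/tmp/page1.html")
window.add("run_python", "notes on page 1")
window.add("fetch_webpage", "/tmp/page2.html")
# The first result has been evicted, so a later step can no longer see /tmp/page1.html.
```

The prompt stays bounded, but eviction is based purely on age, so nothing protects an early result that a later action still depends on.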

What I learned?

With these exercises, I’m learning ways to manage memory better and to make the agent more aware and focused on solving the user’s query. I’m trying to build a memory management framework or architecture for LLMs so that they can fluently store and access information without losing sight of the goal.

Next, I’m thinking about dividing the prompt into separate memory stores, like the ones we have in our brains: storage and retrieval for Semantic Memory (facts and knowledge), Episodic Memory (past experiences), and Procedural Memory (system instructions), so that I can better manage them as a whole.

This way, I think I can keep the overall prompt within the context window limit and supply quality data that is needed at that moment.
